Chapter 2

Molecular dynamics

Context: Molecular dynamics follows the motion of individual atoms through a solution of Newton’s equations of motion. We need integration algorithms to be able to solve this set of coupled differential equations on a computer.

Additional resources:

- Chapter 3 of Allen & Tildesley, Computer Simulation of Liquids

- Appendix ?? on dynamical systems.

2.1 Equations of motion

2.1.1 Newton’s equations of motion

We have already (almost) all the ingredients to carry out a molecular dynamics simulation. From our or potential energy expression \(E_{\text {pot}}(\{\vec {r}_i\})\) discussed in the previous chapter, we obtain the force \begin {equation} \vec {f}_i = -\partial E_{\text {pot}}/\partial \vec {r}_i \end {equation} on each of the \(N\) atoms. Once we know the forces, we can obtain the accelerations \(\vec {a}_i\) through Newton’s third law, \begin {equation} \vec {f}_i = m_i \vec {a}_i. \end {equation} We are therefore assuming that atom \(i\) can be described as a point of mass \(m_i\)! The mass can be obtained from the periodic table of elements. Note that the mass listed in the periodic table is usually the average over all isotopes weighted by their occurrence on earth, and this mass is used for most practical purposes. For some application, in particular to understand the different behavior of Hydrogen and Deuterium, it can be necessary to actually model the individual isotopes by using their respective mass.

We further have \(\vec {a}_i = \dot {\vec {v}}_i\), where \(\vec {v}_i\) is the velocity of atom \(i\), and \(\vec {v}_i = \dot {\vec {r}}_i\). The dot superscript indicates derivative with respect to time. The set of linear differential equations to solve is therefore \begin {equation} \dot {v}_i(t) = \vec {f}_i(t)/m_i\ {\text { and} }\ \dot {\vec {r}}_i(t) = \vec {v}_i(t) \label {eq:Newton} \end {equation} with the initial (boundary) conditions \(\vec {r}_i(0) = \vec {r}_0\) and \(\vec {v}_i(0) = \vec {v}_0\). Note that the boundary condition is an integral part of the differential Eq. \eqref{eq:Newton}. The state of the system is therefore fully and uniquely determined by the positions \(\vec {r}_i\) and the velocities \(\vec {v}_i\) of all atoms. This set of positions \(\vec {r}_i\) and momenta \(\vec {p}_i = \vec {v}_i/m_i\) defines a point in phase-space \(\vec {\Gamma } = \{ \vec {r}_i, \vec {p}_i\}\). The evolution of position and velocities given by Eq. \eqref{eq:Newton} can therefore be thought of as a single point moving in the \(6N\) dimensional phase-space.

Code example: For a molecular dynamics code, it is useful to have a data structure that represents the state of the simulation and stores at least positions and velocities. This data structure could also store element names (or atomic numbers), masses and forces. An example that uses Eigen arrays as the basic array container is shown below. As a general rule, the data structure should be designed in a way that data that is processed consecutively is also stored in memory in a contiguous manner. This ensures predictable memory access patterns and efficient caching of the data necessary for computation. Instead of the Atoms container described below, we could be tempted to create a class Atom that contains the positions, velocities, etc. of a single atom and then use an array (e.g. std::vector<Atom>) of that class as the basic data structure. However, positions are then no longer consecutive in memory: they are interlaced with other atomic data. A function (e.g. computing forces) that does not need the velocities would still load them into the cache, causing more data transfers between cache and RAM, lowering performance. For high-performance numerical code, it is therefore always preferable to use structures of arrays rather than arrays of structures.

1// Type aliases 2using Positions_t = Eigen::Array3Xd; 3using Velocities_t = Eigen::Array3Xd; 4using Forces_t = Eigen::Array3Xd; 5 6struct Atoms { 7 Positions_t positions; 8 Velocities_t velocities; 9 Forces_t forces; 10 11 Atoms(Positions_t &p) 12 : positions{p}, 13 velocities{3, p.cols()}, 14 forces{3, p.cols()} { 15 velocities.setZero(); 16 forces.setZero(); 17 } 18 19 size_t nb_atoms() const { 20 return positions.cols(); 21 } 22};

2.1.2 Kinetic energy and energy conservation

In addition to the potential energy \(E_{\text {pot}}(\{ \vec {r}_i\})\), the dynamical state of a system is characterized by its kinetic energy, \begin {equation} E_{\text {kin}}(\{ \vec {p}_i\}) = \sum _i \frac {1}{2} \frac {p_i^2}{m_i}. \end {equation}

Note: The temperature is simply a measure of the kinetic energy of the system, \(\frac {3}{2} N k_B T = E_{\text {kin}}\) where \(N\) is the number of atoms. In other words, \(E_{\text {kin}}\) measures the variance of the velocity distribution, which is Gaussian. We will learn more about this when discussing the basics of statistical mechanics.

The total energy \begin {equation} H(\{ \vec {r}_i\},\{ \vec {p}_i\}) = E_{\text {kin}}(\{ \vec {p}_i\}) + E_{\text {pot}}(\{ \vec {r}_i\}) \label {eq:hamil} \end {equation} is a conserved quantity during the motion of the atoms. This can be seen by showing that the derivative of the total energy with respect to time vanishes, \begin {equation} \dot {H} = \dot {E}_{\text {kin}} + \dot {E}_{\text {pot}} = \sum _i \frac {\vec {p}_i \dot {\vec {p}}_i}{m_i} + \sum _i \frac {\partial E_{\text {pot}}}{\partial \vec {r}_i} \dot {\vec {r_i}} = \sum _i \vec {v}_i \vec {f}_i - \sum _i \vec {v}_i \vec {f}_i = 0. \end {equation} \(H\) is also called the Hamiltonian of the system.

Note: Measuring the total energy (or any other conserved quantity!) and checking whether it is constant in a molecular dynamics simulation is a way of testing if the time step \(\Delta t\) used in the numerical integration is small enough. We will discuss numerical integration in detail below.

A generalization of Newton’s equations of motion are Hamilton’s equations of motion, \begin {align} \dot {\vec {r}}_i &= \frac {\partial H}{\partial \vec {p}_i} \\ \dot {\vec {p}}_i &= -\frac {\partial H}{\partial \vec {r}_i}, \end {align}

and it is straightforward to show that these equations reduce to Newton’s equations of motions for the Hamiltonian given by Eq. \eqref{eq:hamil}. Hamilton’s equation of motion remain valid when positions \(\vec {r}_i\) and momenta \(\vec {p}_i\) are replaced by generalized coordinates that consider constraints, such as for example the angle of a (rigid) pendulum. These equations will become important when we discuss statistical mechanics and temperature control in molecular dynamics simulations using thermostats, where a generalized degree of freedom is the internal state of the heat bath that controls the temperature.

2.2 Integration algorithms

The main ingredient in any molecular dynamics simulation, regardless of the underlying model, is the numerical solution of Eqs. \eqref{eq:Newton}. A plethora of algorithms have been developed over the years, but for most practical purposes the Velocity-Verlet algorithm is used nowadays. For instructive purposes we will start out with a simple integration method, the Euler integration, before discussing Velocity-Verlet.

2.2.1 Euler integration

In order to follow the trajectories of all atoms, we need to integrate the above differential equation. On a computer, a continuous differential equation needs to be replaced by a discrete equation. Equations \eqref{eq:Newton} are continuous in time and hence need to be discretized. (Note that our system is already discrete spatially since we are dealing with mass points, but each of these points corresponds to a physical object, so this is not the result of a discretization procedure.) The simplest numerical integration scheme is the forward Euler algorithm, in which forces and velocities are assumed to be constant over time intervals \(\Delta t\).

To see this, we write the above differential equation as \begin {equation} d \vec {v}_i = \frac {\vec {f}_i(t)}{m_i}\,dt\ {\text { and} }\ d\vec {r}_i(t) = \vec {v}_i(t)\,dt \end {equation} i.e., we move the differential \(d t\) of \(\dot {\vec {v}}_i = d\vec {v}/d t\) to the right hand side of the equation. We can now straightforwardly integrate the equation from time \(t\) to time \(t + \Delta t\) while assuming that \(\vec {f}_i\) and \(\vec {v}_i\) remain constant. This yields \begin {align} \vec {v}_i(t+\Delta t) - \vec {v}_i(t) &= \frac {\vec {f}_i(t)}{m_i} \Delta t \label {eq:eulerexplicita} \\ \vec {r}_i(t+\Delta t) - \vec {r}_i(t) &= \vec {v}_i(t) \Delta t \label {eq:eulerexplicitb} \end {align}

which is obviously only a good approximation for small \(\Delta t\)! This algorithm is called Euler integration.

The same equation can be derived by Taylor-expanding \(\vec {r}_i(t+\Delta t)\) up to first order in \(\Delta t\). The integration error of this algorithm is hence \(O(\Delta t^2)\). The Euler algorithm is not reversible, i.e. starting from time \(t+\Delta t\) and integrating backwards one ends up with a different result at time \(t\). Applying the Euler algorithm with timestep \(-\Delta t\) gives \begin {align} \vec {v}_i(t) - \vec {v}_i(t+\Delta t) &= -\frac {\vec {f}_i(t+\Delta t)}{m_i} \Delta t \\ \vec {r}_i(t) - \vec {r}_i(t+\Delta t) &= -\vec {v}_i(t+\Delta t) \Delta t \end {align}

These equations cannot be re-arranged to give Eqs. \eqref{eq:eulerexplicita} and \eqref{eq:eulerexplicitb}. Forward Euler integration is generally not a good algorithm and requires very small time steps.

2.2.2 Leap-frog integration

Leap-frog assumes positions are defined at times \(t_i\) and velocities at times \(t_i+\Delta t/2\), and can be derived from an argument similar to the one given above. Specifically, we combine the results of a Taylor expansion \(\pm \Delta t/2\), yielding \begin {align} \vec {v}_i(t+\Delta t/2) - \vec {v}_i(t-\Delta t/2) &= \frac {\vec {f}_i(t)}{m_i} \Delta t \label {eq:leapfrog1} \\ \vec {r}_i(t+\Delta t) - \vec {r}_i(t) &= \vec {v}_i(t+\Delta t/2) \Delta t. \end {align}

Note that Eq. \eqref{eq:leapfrog1} is similar to Eq. \eqref{eq:eulerexplicita}, except the force is evaluated at the mid-point. The resulting algorithm is reversible. Applying the Leap-frog algorithm with timestep \(-\Delta t\) gives \begin {align} \vec {v}_i(t-\Delta t/2) - \vec {v}_i(t+\Delta t/2) &= -\frac {\vec {f}_i(t)}{m_i} \Delta t \\ \vec {r}_i(t) - \vec {r}_i(t+\Delta t) &= -\vec {v}_i(t+\Delta t/2) \Delta t \end {align}

Bring the terms on the left hand side to the right and vice-versa, and you arrive at the original equations for forward integration. Leap-frog is therefore reversible.

2.2.3 Verlet integration

Let us now Taylor expand \(\vec {r}_i(t\pm \Delta t)\) up to third order in \(\pm \Delta t\), \begin {equation} \label {eq:taylor_tplus} \vec {r}_i(t\pm \Delta t) = \vec {r}_i(t) \pm \vec {v}_i(t) \Delta t + \frac {1}{2m_i} \vec {f}_i(t) \Delta t^2 \pm \frac {1}{6} \dot {\dot {\dot {\vec {r}}}}_i(t) \Delta t^3 + O(\Delta t^4). \end {equation} Note that only the odd exponents see the sign of \(\pm \Delta t\). The sum of this equation for expansion in \(+\Delta t\) and \(-\Delta t\) gives the positions update, \begin {equation} \vec {r}_i(t+\Delta t) + \vec {r}_i(t-\Delta t) = 2\vec {r}_i(t) + \frac {1}{m_i} \vec {f}_i(t) \Delta t^2 + O(\Delta t^4). \label {eq:verlet} \end {equation} Eq. \eqref{eq:verlet} is called the Verlet algorithm. Instead of requiring the positions \(\{ \vec {r}_i(t)\}\) and velocities \(\{ \vec {v}_i(t)\}\) it requires the positions of the current \(\{ \vec {r}_i(t)\}\) and past \(\{ \vec {r}_i(t-\Delta t)\}\) times for the integration.

The difference between the expansion for \(+\Delta t\) and \(-\Delta t\) yields the velocities, \begin {equation} \vec {r}_i(t+\Delta t) - \vec {r}_i(t-\Delta t) = 2\vec {v}_i(t) \Delta t + O(\Delta t^3). \end {equation} Note that in order to compute the velocities at time t in the regular Verlet algorithm, we need to know the positions at time \(t + \Delta t\). Verlet and Leap-Frog are identical algorithms, since Leap-Frog stores the velocities at the intermediate time \(t+\Delta t/2\). It is usually useful to be able to know both, positions and velocities, at time \(t\). This problem is solved by the Velocity-Verlet algorithm, described in the following section.

2.2.4 Velocity-Verlet integration

Let us now also Taylor expand \(\vec {r}_i(t)\) up to third order in \(\Delta t\) at \(\vec {r}_i(t+\Delta t)\), i.e. we integrate backwards in time from \(t + \Delta t\) to \(t\). This gives \begin {equation} \vec {r}_i(t) = \vec {r}_i(t+\Delta t) - \vec {v}_i(t+\Delta t) \Delta t + \frac {1}{2m_i} \vec {f}_i(t+\Delta t) \Delta t^2 - \frac {1}{6} \dot {\dot {\dot {\vec {r}}}}_i(t) \Delta t^3 + O(\Delta t^3) \label {eq:taylor_r} \end {equation} Equation \eqref{eq:taylor˙tplus} is the positions update of the Velocity-Verlet algorithm. The sum of Eq. \eqref{eq:taylor˙tplus} and Eq. \eqref{eq:taylor˙r} gives the velocity update in the Velocity-Verlet algorithm: \begin {align} \vec {r}_i(t+\Delta t) &= \vec {r}_i(t) + \vec {v}_i(t)\Delta t + \frac {1}{2m_i} \vec {f}_i(t) \Delta t^2\\ \vec {v}_i(t+\Delta t) &= \vec {v}_i(t) + \frac {1}{2m_i} \left (\vec {f}_i(t) + \vec {f}_i(t+\Delta t) \right ) \Delta t, \end {align}

Note that this algorithm is often split in the form of a predictor-corrector scheme since this saves computation time and the necessity to keep past forces around. The predictor step is \begin {align} \vec {v}_i(t+\Delta t/2) &= \vec {v}_i(t) + \frac {1}{2m_i} \vec {f}_i(t) \Delta t \label {eq:vvpred1} \\ \vec {r}_i(t+\Delta t) &= \vec {r}_i(t) + \vec {v}_i(t+\Delta t/2) \Delta t \label {eq:vvpred2} \end {align}

where \(\vec {v}_i(t+\Delta t/2)\) is the predicted velocity. After this we compute new forces, \(\vec {f}_i(t+\Delta t)\). We then correct the velocities via \begin {equation} \vec {v}_i(t+\Delta t) = \vec {v}_i(t+\Delta t/2) + \frac {1}{2m_i} \vec {f}_i(t+\Delta t) \Delta t \end {equation} The Velocity-Verlet algorithm is the integration algorithm used in most molecular dynamics codes. It has the additional properties that is it symplectic, which means it conserves phase-space volume. We will come back to what this mean when talking about statistical mechanics.

Code example: We can implement the velocity-verlet algorithm in a few lines of C++ code using vectorized Eigen operations. The prediction step

1void verlet_step1(Atoms &atoms, double timestep, 2 double mass) { 3 atoms.velocities += 0.5 * atoms.forces * timestep / mass; 4 atoms.positions += atoms.velocities * timestep; 5}

implements Eq. \eqref{eq:vvpred1}. We then compute new forces and correct the velocities via

1void verlet_step2(Atoms &atoms, double timestep, 2 double mass) { 3 atoms.velocities += 0.5 * atoms.forces * timestep / mass; 4}

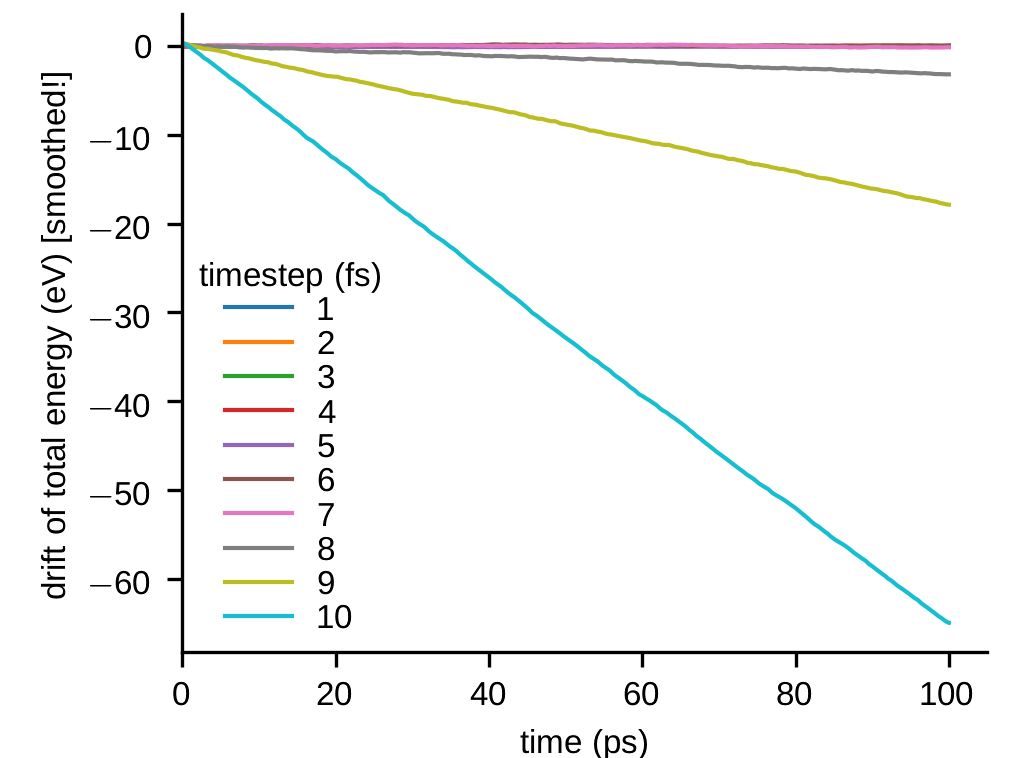

Note: The timestep in MD simulations has to be on the order of femtoseconds, in order to resolve the fastest atomic vibrations. For example, in simulations with metals and Embedded Atom Method (EAM) potentials, \(\Delta t=1\) fs is typically a safe choice. How can we check that the timestep is sensible? One possibility is to simply let a configuration in time using the Velocity-Verlet algorithm. This is sometimes called the micro-canonical or NVE ensemble. (NVE because number of atoms, volume and energy is constant.) We then record the evolution of the total (kinetic plus potential) energy, which should be constant. Due to the approximations described above, discrete time integration schemes introduce numerical errors. If \(\Delta t\) is larger than a critical value, the integration error grows unstable and causes a noticeable drift of the total energy. The figures below show the results of such a simulation. A system of \(108000\) Au atoms was simulated for \(100\) ps with various values of \(\Delta t\). The \(y\)-axis shows the difference between the current and initial values of the total energy. The data was smoothened to suppress high-frequency fluctuations in the figure. For this system, even \(5\) fs would still be an acceptable time step.